Getting the most out of your forage evaluation: Understanding NDF digestibility

Digestible fiber is important for dairy cattle productivity, but how can we quantify it?

A key role of ruminants in our food system is transforming fiber into energy and protein for human nutrition. In addition, producing and using high-quality forages is a major determinant of dairy farm profitability. Analyses that help farms assess forage quality are widely used and strongly influence ingredient purchasing and diet formulation for dairy cattle.

Neutral detergent fiber (NDF) is a measure of plant cell wall content, expressed as a percentage of total dry matter (DM). Adequate NDF in dairy cow diets supports proper rumen function and formation of the rumen mat. The challenge is that feeding higher NDF concentrations to high-producing dairy cows can cause excessive gut fill and limit feed intake.

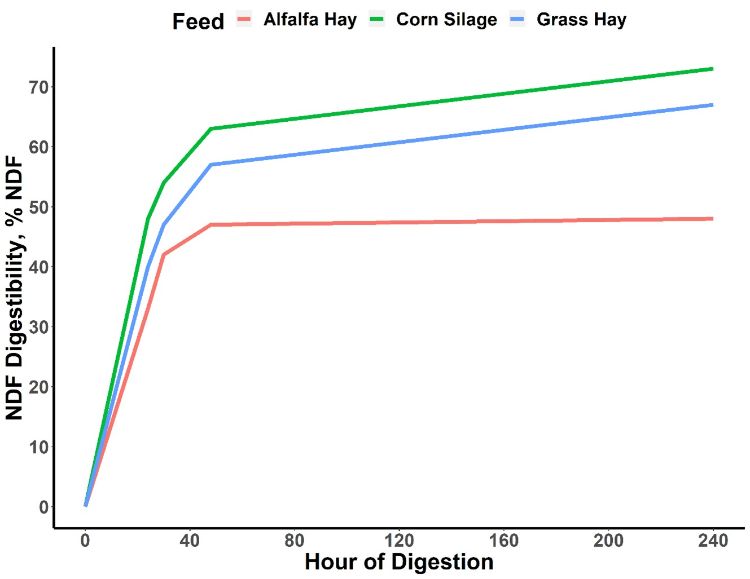

To strike the right balance between feeding enough NDF to support rumen function and not feeding so much that it limits feed intake, dairies have become increasingly reliant on in vitro NDF digestibility (ivNDFD) measures. The ivNDFD is the proportion of NDF that is digested after a set incubation time (24, 30, 48, or 240 hours) in a flask of buffered rumen fluid, which contains live ruminal bacteria, collected from a donor cow. Undigested NDF (uNDF) is a related measure: the portion of NDF that remains undigested after a set time of in vitro fermentation, usually 240 hours. Both ivNDFD and uNDF are typically expressed as a proportion of the NDF in the feedstuff, so at the same time point ivNDFD + uNDF = 100%.
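The arithmetic behind these definitions is simple, and a short sketch may help. The Python below assumes both values are reported as a percentage of NDF and measured at the same time point (for example, 240-hour ivNDFD and 240-hour uNDF). It also shows the usual conversion from a percentage of NDF to a percentage of dry matter, which multiplies by the feed's NDF concentration. The function names and example numbers are hypothetical, chosen only for illustration.

```python
def undf_from_ivndfd(ivndfd_pct_ndf: float) -> float:
    """Return uNDF (% of NDF) from ivNDFD (% of NDF) measured at the SAME time point."""
    if not 0.0 <= ivndfd_pct_ndf <= 100.0:
        raise ValueError("ivNDFD must be between 0 and 100% of NDF")
    return 100.0 - ivndfd_pct_ndf


def pct_ndf_to_pct_dm(value_pct_ndf: float, ndf_pct_dm: float) -> float:
    """Convert a value expressed as % of NDF to % of total dry matter."""
    return value_pct_ndf * ndf_pct_dm / 100.0


# Hypothetical forage: 40% NDF (DM basis), 80% ivNDFD at 240 hours.
undf_240 = undf_from_ivndfd(80.0)            # 20.0 (% of NDF)
undf_dm = pct_ndf_to_pct_dm(undf_240, 40.0)  # 8.0 (% of DM)
print(undf_240, undf_dm)
```

For this hypothetical forage, uNDF is 20% of NDF, which works out to 8% of total dry matter.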

Feed analysis laboratories offer these NDF digestibility assays, but the assays are time-consuming to run and therefore costly. To reduce the cost of these estimates, many laboratories offer near-infrared reflectance spectroscopy (NIRS) predictions of ivNDFD as an alternative to “wet chemistry.”

Near infrared assessment of NDFD

NIRS instruments bounce specific wavelengths of light off feed samples and measure how much light is absorbed or reflected back. The patterns of absorption and reflectance indicate the chemical composition of the material tested. To make use of these patterns, reference feeds are analyzed both by wet chemistry and by NIRS, and prediction equations are built from the relationship between the two sets of results.

When a farm sends in a sample, these equations are then used to convert its NIRS readings into predicted chemical properties (in this case, NDFD). As a result, NIRS analyses are only as strong as the reference database underlying the predictions. A calibration database containing many thousands of samples similar to the one you want analyzed should yield accurate NIRS values. Keep in mind, however, that NIRS predictions cannot be more accurate than that lab's wet chemistry analyses, because the prediction equations are built from those wet chemistry results.
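To make the calibration idea concrete, here is a deliberately simplified Python sketch. It assumes a single NIRS reading per sample and fits an ordinary least-squares line to paired reference samples (NIRS reading plus the lab's wet chemistry value), then applies that line to a new sample. Real NIRS calibrations use many wavelengths and multivariate methods such as partial least squares regression, so treat this as an illustration of the principle, not any lab's actual procedure. The function names and data are hypothetical.

```python
from statistics import fmean


def fit_calibration(readings, reference_values):
    """Fit reference ~= slope * reading + intercept by ordinary least squares.

    readings: one NIRS reading per reference sample (a hypothetical single value)
    reference_values: the lab's wet chemistry result for the same samples
    """
    if len(readings) != len(reference_values) or len(readings) < 2:
        raise ValueError("need at least two paired reference samples")
    x_bar = fmean(readings)
    y_bar = fmean(reference_values)
    sxx = sum((x - x_bar) ** 2 for x in readings)
    if sxx == 0:
        raise ValueError("readings must vary across reference samples")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(readings, reference_values))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(calibration, reading):
    """Apply a fitted calibration to a new sample's NIRS reading."""
    slope, intercept = calibration
    return slope * reading + intercept


# Made-up reference set: readings paired with wet chemistry ivNDFD (% of NDF).
cal = fit_calibration([0.2, 0.4, 0.6, 0.8], [40.0, 50.0, 60.0, 70.0])
farm_sample_ndfd = predict(cal, 0.5)  # close to 55
```

Because the fitted line only reproduces the lab's own wet chemistry values, any error in those reference values carries straight into every prediction, which is why NIRS cannot outperform the lab's wet chemistry.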

For proximate nutrients (DM, CP, NDF, starch), NIRS performs well because the wet chemistry methods are highly standardized. NIRS estimates of feed digestibility are less accurate because the wet chemistry assay itself is variable, which makes accurate prediction equations harder to build. Differences in the composition of rumen fluid from donor cows account for much of this variability. In addition, NIRS may be inaccurate for any feed constituent when the forage sample's composition falls outside the normal range, because the unique chemical makeup of such feeds may not be well represented in the reference database.

Reference range errors can arise from uncommon forage types or from common forages that were stressed during growth by drought, heat, flooding, or pests. Correct forage identification is also critical when NIRS is used, because NIRS prediction equations are specific to forage type (e.g., alfalfa vs. grass vs. mixed hay). For NIRS analysis to be as accurate as possible, make sure your feed sample is properly labeled and described.
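The two safeguards described above, choosing the equation for the correct forage type and flagging samples outside the calibration range, might look like this in a minimal Python sketch. The equation coefficients, reading ranges, and names (`Calibration`, `CALIBRATIONS`, `predict_ndfd`) are all hypothetical. Checking one reading against the reference minimum and maximum is also a simplification; commercial NIRS software generally screens samples with multivariate distance statistics computed across the whole spectrum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    slope: float
    intercept: float
    reading_min: float  # lowest reading among this forage type's reference samples
    reading_max: float  # highest reading among this forage type's reference samples


# Hypothetical forage-specific equations; real labs maintain their own.
CALIBRATIONS = {
    "alfalfa": Calibration(slope=50.0, intercept=30.0, reading_min=0.2, reading_max=0.8),
    "grass": Calibration(slope=45.0, intercept=35.0, reading_min=0.3, reading_max=0.9),
}


def predict_ndfd(forage_type, reading, calibrations=CALIBRATIONS):
    """Return (predicted NDFD, within_reference_range) for a labeled sample.

    Raises KeyError if no equation exists for the stated forage type,
    rather than silently borrowing another forage's equation.
    """
    key = forage_type.strip().lower()
    if key not in calibrations:
        raise KeyError(f"no calibration for forage type {forage_type!r}")
    cal = calibrations[key]
    within_range = cal.reading_min <= reading <= cal.reading_max
    return cal.slope * reading + cal.intercept, within_range


value, ok = predict_ndfd("Alfalfa", 0.5)  # (55.0, True)
```

Returning a flag, rather than refusing to predict, leaves the decision to the user: an out-of-range sample is a reasonable candidate for wet chemistry instead. An unlabeled or unsupported forage type (here, "mixed hay") raises an error instead of producing a number.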

Best practices for NDFD analysis

To get the most value from your investment in NDFD analysis, keep these key points in mind:

- Use a forage testing laboratory certified by the National Forage Testing Association (NFTA). This voluntary certification program monitors testing accuracy among laboratories, and NFTA maintains a list of certified labs.

- Use the same laboratory for all your analyses. Different labs have different technicians, slightly different methods and materials, and different cows providing rumen fluid, so results from different labs should not be directly compared.

- Only compare ivNDFD within a feed type. For example, alfalfa hay may have 40-45% ivNDFD at 30 hours, while grass hays may have ivNDFD above 50%. That doesn’t mean the grass hay is “better” than the alfalfa hay; the two behave very differently in the rumen. These analyses are most useful for comparing and choosing among feeds of the same type.

- Compare feeds at the same time point. Make sure you’re comparing apples to apples: comparing a 30-hour ivNDFD to a 48-hour ivNDFD is inherently biased, because a longer incubation gives the rumen microbes more time to digest fiber.

- Avoid using NIRS analysis for unusual samples. For reasonably normal corn silage or alfalfa haylage samples, reputable feed analysis laboratories can generate reliable results using NIRS. However, forages that are not widely used or silages with unusual characteristics may not be well represented in a reference database and therefore may not be accurately assessed by NIRS.
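Three of these rules (same lab, same feed type, same time point) can be encoded as a check that runs before any two ivNDFD results are compared. The Python sketch below is one hypothetical way to do that; the `NdfdResult` record, its fields, and the lab and sample names are illustrative assumptions, not part of any lab's reporting format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NdfdResult:
    """One ivNDFD result as it might be recorded from a lab report (hypothetical format)."""
    sample_id: str
    lab: str
    forage_type: str
    hours: int              # fermentation time point, e.g. 30 or 48
    ivndfd_pct_ndf: float   # ivNDFD as % of NDF


def compare_ivndfd(a: NdfdResult, b: NdfdResult) -> float:
    """Return a's ivNDFD minus b's, in percentage points of NDF.

    Raises ValueError if the results come from different labs,
    different forage types, or different fermentation time points.
    """
    for attr in ("lab", "forage_type", "hours"):
        if getattr(a, attr) != getattr(b, attr):
            raise ValueError(
                f"{a.sample_id} and {b.sample_id} differ in {attr}: "
                f"{getattr(a, attr)!r} vs {getattr(b, attr)!r}"
            )
    return a.ivndfd_pct_ndf - b.ivndfd_pct_ndf


# Made-up values: two alfalfa hays tested by the same lab at 30 hours.
hay_1 = NdfdResult("hay-1", "Lab A", "alfalfa", 30, 44.0)
hay_2 = NdfdResult("hay-2", "Lab A", "alfalfa", 30, 41.0)
print(compare_ivndfd(hay_1, hay_2))  # 3.0
```

Raising an error, instead of silently returning a number, makes an apples-to-oranges comparison (alfalfa vs. grass, or 30 vs. 48 hours) hard to overlook.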
